Privacy-Preserving & AI Federated Learning: Exploring OpenFL, CrypTen, PySyft, TensorFlow Privacy, and Cloud Provider SDKs

Securing sensitive data during AI training is non-negotiable for most organizations. Whether you’re fine-tuning large language models (LLMs) or training a model on proprietary data, privacy and security cannot be an afterthought. The AI community has developed several open-source frameworks and cloud solutions that make it possible to build privacy-preserving machine learning systems.

In this blog post, I will go over five promising tools and frameworks:

- OpenFL

- CrypTen

- PySyft

- TensorFlow Privacy

- Federated Learning SDKs by Cloud Providers

We’ll discuss what each offers, how they work, and where they might fit into your privacy-first AI workflow.

OpenFL

OpenFL is an open-source federated learning framework designed to enable collaborative training across multiple parties without sharing raw data. Originally developed by Intel and now part of the Linux Foundation, OpenFL provides a flexible and extensible platform for orchestrating federated learning (FL) environments.

It provides:

- Decentralized Training: OpenFL allows multiple participants — such as hospitals, banks, or other organizations — to jointly train a model while keeping their data local.

- Customizable Workflows: With a modular architecture, you can define custom training loops, aggregation protocols, and privacy-preserving mechanisms.

- Secure Aggregation: OpenFL supports secure aggregation techniques that ensure individual contributions remain private even during model updates.

- Industry Adoption: Designed for collaboration, OpenFL has been used in sensitive fields like healthcare and finance, where data privacy is non-negotiable.

But Again, What is Federated Learning?

This video does a really good job:

Core Components of OpenFL

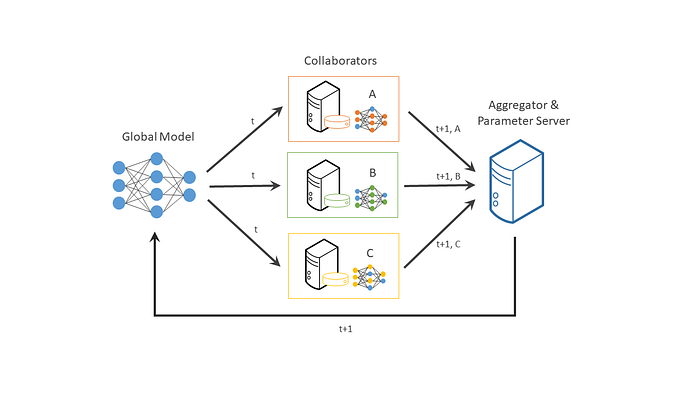

OpenFL’s core components are designed to deliver a federated learning experiment through short-lived services that perform specific roles. Look at the architecture below:

The Aggregator acts as the central orchestrator — it collects model updates from different Collaborators and combines them to form a global model. It essentially works as a parameter server during each training round and is responsible for executing the model aggregation logic, which can be customized using plugins. The Aggregator is only active for the duration of an experiment, ensuring that it fulfills its role without being permanently deployed.

On the other hand, Collaborators are the workhorses that handle local training using their own datasets. They convert data from deep learning frameworks like TensorFlow or PyTorch into OpenFL’s internal tensor representation, execute assigned training tasks, and exchange model parameters with the Aggregator. Each Collaborator is an independent service that loads a specific shard of the overall dataset and sends its locally trained weight tensors to the central node for aggregation. Together, these components enable a secure, modular, and framework-agnostic federated learning environment where participants can collaboratively train a model without sharing raw data.

You can find more information about these components here.

Use Cases

For example, hospitals and healthcare organizations can collaborate to develop diagnostic models without exposing patient data. Banks can jointly create fraud detection models while keeping customer transaction details confidential. Tech companies can potentially use their customer data to generate synthetic data to train their models.

Check out the OpenFL documentation for guides on installation, setting up federated networks, and customizing your training pipelines.

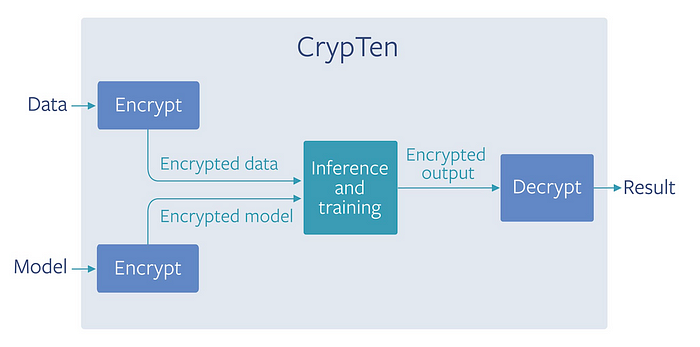

CrypTen: Secure Multiparty Computation for Deep Learning

CrypTen is an innovative framework from Meta (Facebook) designed to facilitate secure multiparty computation (MPC) in PyTorch. CrypTen empowers teams to perform computations on encrypted data without ever exposing the underlying values.

By leveraging MPC protocols, CrypTen allows multiple parties to jointly compute functions over their inputs while keeping those inputs private.

A CrypTensor is basically a secret or encrypted version of a regular tensor (like the ones you use in PyTorch). Imagine you have a bunch of numbers stored in a grid (a tensor), and you want to perform math on these numbers without anyone ever seeing them. CrypTensor lets you do exactly that by keeping the numbers hidden (encrypted) while still letting you add, multiply, or run more complex operations on them.

Encrypted Data Structure:

It works just like a normal PyTorch tensor but its values are kept secret. Only those with the proper key or through a secure process can reveal (or “decrypt”) the real numbers.

Operations:

You can perform many common tensor operations (like addition, subtraction, multiplication, matrix multiplication, pooling, etc.) on a CrypTensor. Even though the numbers are encrypted, the operations work as if you were dealing with plain numbers.

Secure Computation:

All these operations are done using secure multi-party computation (MPC). This means that even if someone tries to peek at the data during computation, they only see scrambled, meaningless information.

Autograd Support:

CrypTensor also supports automatic differentiation (autograd), which is used for training neural networks. You can calculate gradients and perform backpropagation without ever revealing your underlying data.

CrypTensor lets you do the same math you’re used to, but in a way that protects your sensitive data. This is especially useful in privacy-preserving machine learning, where you want to train models on secret or sensitive data without exposing that data to anyone else.

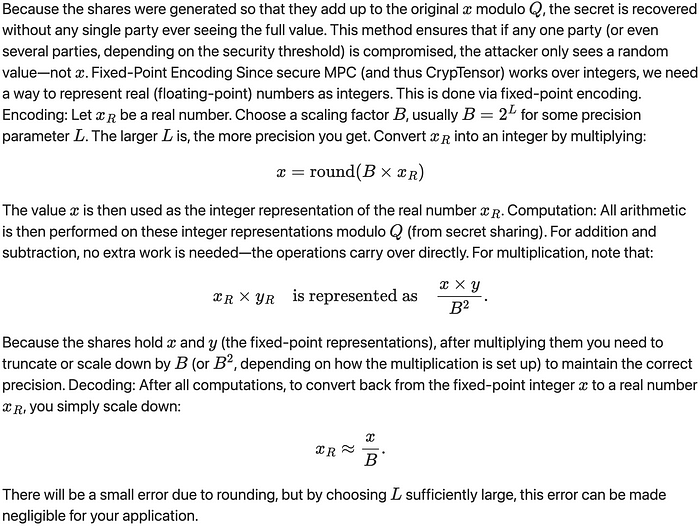

Secret Sharing

CrypTensor relies on “secret sharing” to keep numbers hidden. Imagine you have a secret number that you split into several “shares” and distribute them to different parties. No single party can figure out the secret on its own — the original number is only recovered when all (or a majority) of the shares are combined (using arithmetic modulo a big number). This is known as arithmetic secret sharing.

Here’s how it works mathematically:

Setup:

- Let x be a secret number.

- Assume we have n parties.

- Choose a large modulus Q (often a power of 2, e.g. 2^64) so that all arithmetic is done modulo Q.

Sharing Process

Note: Medium currently doesn’t have native support for mathematical notation and formulas. This limitation makes it difficult to publish scientific and mathematical content on the platform, as precise mathematical formatting cannot be done in Medium’s simple editor. However, I am including them as images below:

For more details, you can check out the CrypTen documentation on CrypTensor operations.

PySyft

PySyft is an open-source Python library designed to extend popular deep learning frameworks (like PyTorch and TensorFlow) with privacy-preserving features. Developed by the OpenMined community, PySyft is your gateway to federated learning, differential privacy, and encrypted computation. It supports:

- Federated Learning: Easily distribute your model training across multiple devices or servers, ensuring that raw data remains local.

- Differential Privacy Integration: Built-in support for differential privacy helps protect against data leakage during training.

- Encrypted Computation: Through techniques like multi-party computation (MPC), PySyft allows you to perform calculations on encrypted data.

- Seamless API: Leverages familiar APIs from popular deep learning frameworks, making it accessible to those already versed in PyTorch or TensorFlow.

Check out the PySyft GitHub repository for installation instructions, tutorials, and community forums to help you integrate privacy features into your AI projects. They also have some good tutorials here.

TensorFlow Privacy

TensorFlow Privacy is an open-source library that integrates with TensorFlow to help developers train models with differential privacy guarantees. It’s a good tool for organizations that need formal, mathematically proven privacy assurances for their AI models.

It provides modified versions of standard optimizers that incorporate differential privacy during gradient descent. It also provide tools for tracking the cumulative privacy loss (the privacy budget), ensuring that your model meets specified privacy parameters (ε, δ).

It is also designed to plug into existing TensorFlow training loops with minimal changes to your code. It’s also backed by Google and is widely adopted in both academia and industry, with extensive documentation and active community contributions.Getting Started

Visit the TensorFlow Privacy documentation for tutorials, API references, and sample projects to see how you can incorporate differential privacy into your TensorFlow models.

Federated Learning SDKs by Cloud Providers:

Major cloud providers are now offering federated learning solutions as part of their broader AI and machine learning platforms. These SDKs are designed to help you leverage the cloud’s scale while ensuring data privacy.

Cloud providers like Google provide managed services or SDKs that streamline the setup of federated learning. These platforms handle many of the hassle of distributed training, secure aggregation, and communication.

These SDKs are designed to work with other cloud-native services — data storage, compute instances, identity management, and monitoring — so you can build a secure end-to-end pipeline.

Check out the respective documentation from:

- Google TensorFlow Federated (TFF): TensorFlow Federated

- Amazon SageMaker Federated Learning: AWS provides guidance within their documentation. Check out this blog for more information.

These cloud SDKs offer a managed path to implementing federated learning at scale without the need to reinvent the wheel on distributed training, allowing you to focus on model quality and privacy.

As you can see, you can leverage these tools to build AI systems that respect user privacy, comply with global regulations, and still deliver the high performance required in today’s competitive market.