AI Agentic Cybersecurity Tools: Reaper, TARS, Fabric Agent Action, and Floki

What are agentic applications in cybersecurity? They are systems that can autonomously perceive, decide, and act on security tasks. Recent open-source projects are pushing this frontier by integrating AI models and automation into security workflows. Let’s explore four tools (Reaper, TARS, Fabric Agent Action, and Floki) that are working examples of open-source agentic AI systems for cybersecurity.

I will go into the technical details of each tool: its primary use cases, its standout features, and how it advances autonomous security.

Reaper — Autonomous Application Security Testing

Reaper (by Ghost Security) is an open-source framework for modern application security testing, engineered to work alongside AI agents. Written in Go and released under Apache-2.0, Reaper unifies the full web app pentesting workflow — from reconnaissance to exploitation — into a single platform.

In essence, Reaper combines functionality akin to tools like Subfinder (recon), Burp/ZAP (proxying and fuzzing), and more, but “wields a scythe where others use scissors” by integrating all phases in one tool. It features a sleek UI for intercepting and modifying requests, collaborative testing, and reporting results.

Their demo video is pretty cool.

Primary Use Cases: Reaper is designed for web application security assessments, including:

- Penetration Testing & Bug Bounties: Automates tedious recon and fuzzing tasks, helping pentesters find vulnerabilities faster. It performs intelligent domain reconnaissance, intercepts HTTP traffic, and replays or modifies requests to probe for weaknesses.

- Application Security (AppSec) Analysis: Security analysts can use Reaper to continuously scan applications in a streamlined workflow. Reaper validates vulnerabilities and even generates detailed remediation reports, which is useful for DevSecOps integration.

- AI-Assisted Security Testing: When an AI agent is connected, Reaper accepts natural language prompts (e.g. “Check for broken access control on this domain”) and autonomously executes a targeted test plan. The LLM agent uses Reaper’s capabilities as tools — performing scans or fuzzing — then analyzes the results to produce an actionable report.

Strengths & Unique Features: Reaper’s strength lies in its all-in-one approach and AI readiness. Key features include:

- End-to-End Testing Workflow: Combines reconnaissance, request proxying, tampering/replay, active fuzz testing, and vulnerability validation in one interface. This eliminates context-switching between tools.

- AI Integration: Built to be “usable by humans and AI Agents alike”, Reaper treats LLMs as teammates that never sleep. The AI agent can tune test parameters, identify likely vulnerabilities, and summarize findings at machine speed. In the initial release, the agent conducts context-aware fuzzing (guided by recon data) and generates a comprehensive report with remediation guidance.

- Live Collaboration: Supports multiple users (and an AI) working simultaneously, with shared context. This real-time collaboration is a differentiator for team-based testing, allowing coordinated discovery and validation of issues.

- Reporting & Guidance: Reaper auto-generates detailed reports. With AI, these reports include explanations of each finding and suggested fixes, bridging the gap from raw scan output to actionable insight.
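To make the idea of context-aware fuzzing more concrete, here is a minimal Python sketch. It is not Reaper's implementation (Reaper is written in Go, and its internals are not covered here). It only assumes that recon or proxying has produced a captured request with named parameters. The `CapturedRequest` type, the payload table, and the `hint_for` and `build_fuzz_cases` helpers are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Iterator, List

# Hypothetical payload sets, keyed by what the parameter name suggests.
PAYLOADS_BY_HINT: Dict[str, List[str]] = {
    "id": ["0", "-1", "999999", "1 OR 1=1"],
    "path": ["../../etc/passwd", "%2e%2e%2f"],
    "default": ["'", "<script>alert(1)</script>"],
}


@dataclass(frozen=True)
class CapturedRequest:
    """Simplified stand-in for a request captured by an intercepting proxy."""
    method: str
    url: str
    params: Dict[str, str] = field(default_factory=dict)


def hint_for(param_name: str) -> str:
    """Guess a payload family from the parameter name (the 'context')."""
    name = param_name.lower()
    if name.endswith("id"):
        return "id"
    if "file" in name or "path" in name:
        return "path"
    return "default"


def build_fuzz_cases(req: CapturedRequest) -> Iterator[CapturedRequest]:
    """Yield one mutated copy of the request per (parameter, payload) pair."""
    for name in req.params:
        for payload in PAYLOADS_BY_HINT[hint_for(name)]:
            mutated = dict(req.params)  # copy so the original stays untouched
            mutated[name] = payload
            yield replace(req, params=mutated)


if __name__ == "__main__":
    captured = CapturedRequest(
        "GET", "https://app.example.test/invoice",
        {"user_id": "42", "file": "report.pdf"},
    )
    for case in build_fuzz_cases(captured):
        print(case.method, case.url, case.params)
```

The sketch only generates candidate requests; sending them and judging the responses is where a proxy and an AI agent would come in. Only run this kind of test against systems you are authorized to assess.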

Reaper runs as either a Docker container (recommended) or as a binary and is controlled by humans using the local web UI. Running it in Docker:

docker run -t --rm \
  -e HOST=0.0.0.0 \
  -e PORT=8000 \
  -e PROXY_PORT=8080 \
  -e OPENAI_API_KEY=sk-your-key-here \
  -p 8000:8000 \
  -p 8080:8080 \
  ghcr.io/ghostsecurity/reaper:latest

TARS — AI-Powered Pentesting Automation

TARS (Threat Assessment & Response System) is an open-source project aiming to “automate parts of cybersecurity penetration testing using AI agents.” Developed originally as an MVP for a cybersecurity startup (Osgil) in early 2024, it was open-sourced in August 2024 to help the broader security community. TARS is built primarily in Python and uses a Streamlit UI for an easy start (with plans to migrate to a more robust React front-end). Under the hood, it acts as an orchestrator that allows an AI agent to interface with a suite of traditional pentesting tools.

TARS is geared towards automating many steps of a pentest or vulnerability assessment:

- Multi-Tool Orchestrated Scanning: TARS can launch and manage popular security scanners. As of now it supports tools like OWASP Nettacker (vulnerability scanning), RustScan (fast port scanning), OWASP ZAP (web app scanning), Nmap (network mapping), and even basic Linux commands. The agent can decide which tool to run based on the target and phase of testing, chaining results from one tool into another (e.g., using Nmap results to feed ZAP).

- Autonomous Reconnaissance: With web browsing capability via the Brave Search API, TARS can perform reconnaissance like looking up target info or crawling web pages for intel. This helps it gather context before launching scans or to verify findings (similar to how a human tester might manually investigate a finding).

- Vulnerability Analysis & Reporting: TARS doesn’t stop at running scanners — its AI agent parses the output to identify actual vulnerabilities. The long-term vision is that it will not only report issues but also suggest fixes or even apply patches where possible. Currently, it can aggregate findings from multiple tools and present them, potentially with the LLM summarizing the severity and remediation steps.
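The "Nmap results feed ZAP" handoff mentioned above can be sketched in a few lines of Python. This is an illustration rather than TARS code: in TARS an LLM agent decides what to run next, whereas this sketch hard-codes the decision. It assumes the port scan was saved in Nmap's grepable format (`-oG`); the `web_targets_from_nmap` function and the `WEB_SERVICES` table are hypothetical.

```python
from typing import Dict, List

# Service names that suggest an HTTP(S) endpoint, mapped to a URL scheme.
WEB_SERVICES: Dict[str, str] = {
    "http": "http",
    "https": "https",
    "http-alt": "http",
}


def web_targets_from_nmap(grepable_output: str) -> List[str]:
    """Extract URLs for open web ports from Nmap grepable (-oG) output."""
    targets: List[str] = []
    for line in grepable_output.splitlines():
        if not line.startswith("Host:"):
            continue  # skip comment lines such as "# Nmap ... scan initiated"
        fields = line.split("\t")
        host = fields[0].split()[1]
        ports_field = next((f for f in fields if f.startswith("Ports:")), None)
        if ports_field is None:
            continue  # e.g. a "Status: Up" line without port data
        for entry in ports_field[len("Ports:"):].split(","):
            # Each entry looks like: port/state/protocol/owner/service/...
            parts = entry.strip().split("/")
            if len(parts) < 5:
                continue
            port, state, service = parts[0], parts[1], parts[4]
            if state == "open" and service in WEB_SERVICES:
                targets.append(f"{WEB_SERVICES[service]}://{host}:{port}")
    return targets


if __name__ == "__main__":
    sample = (
        "Host: 192.0.2.10 ()\tStatus: Up\n"
        "Host: 192.0.2.10 ()\tPorts: 22/open/tcp//ssh///, "
        "80/open/tcp//http///, 443/closed/tcp//https///\n"
    )
    for url in web_targets_from_nmap(sample):
        print("candidate for web scanning:", url)
```

The resulting URLs are what a web scanner such as ZAP would take as input in the next phase. How TARS actually passes data between tools is not described in this article.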

Fabric Agent Action — Workflow Automation with Fabric Patterns

Fabric Agent Action is a GitHub Action that brings the power of Daniel Miessler’s Fabric framework into CI/CD workflows through an agent-based approach. In simpler terms, it allows you to automate complex tasks in your repository by invoking AI agents that follow predefined “patterns.” The action is built in Python using LangGraph, a library on top of LangChain for orchestrating multi-step LLM operations.

Fabric Agent Action intelligently selects and executes Fabric Patterns using an LLM, acting as the brains of the operation in GitHub Actions. This means you can embed an autonomous agent in your DevSecOps pipeline (to analyze issues, review pull request content, or perform any scripted workflow), all driven by natural language instructions and AI. It’s essentially an interface between GitHub events (like an issue comment or code push) and the Fabric “patterns” (reusable prompt workflows) that define what the AI should do in response.

Fabric Agent Action is quite flexible; its use cases span automation for developers and project maintainers, including some security-focused scenarios:

- Issue Handling and Q&A: By listening to issue comments, the action can trigger an AI agent to perform tasks like answering a question or formatting content. For example, a user can comment “/fabric summarize this issue” and the agent will gather the issue’s context and reply with a summary. A flowchart in the documentation illustrates how an issue comment command leads the agent to read context, possibly apply a text-cleaning pattern, and post a response. This is useful for documentation or triaging in security projects where common questions can be answered by an AI agent.

- Pull Request Review & Improvement: One specialized agent type (react_pr) is designed for pull request analysis. This could be used to automate code review tasks, e.g., checking a PR for security hotspots or improving code comments. An example given shows the agent taking a prompt to “improve writing of cleaned text” on a PR, then analyzing the PR’s diff and comments to produce an output. In a security context, you might craft a Fabric pattern to scan the PR diff for usage of dangerous functions or insecure configurations, and the agent could comment with findings.

- Automated Workflows & DevOps Tasks: Any complex workflow that has been encoded as a Fabric pattern can be run. This might include things like cleaning up data, generating documentation, or running custom scripts triggered by repository events. Since Fabric itself is a general AI augmentation framework, you could integrate patterns for cloud security checks, dependency audits, or vulnerability scanning on new code. The key is that the agent can route to the correct pattern based on the instruction, functioning like an autonomous decision-maker in your CI pipeline.

- ChatOps in Repositories: The action essentially enables ChatOps with an AI agent. Team members can interact with the repo by issuing commands (prefixed with /fabric) in comments, and the AI will execute the request. This could even extend to security bots, e.g., a command to run a static analysis and return results could be implemented as a pattern, giving developers on-demand security scans via chat command.
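The command-to-pattern routing described in these use cases can be sketched as follows. This is a simplified illustration, not the action's code: the real action lets an LLM choose the pattern, while this sketch substitutes keyword matching so it runs without any API key. The `parse_command` and `select_pattern` helpers and the keyword table are hypothetical, and the pattern names are used only as examples.

```python
from typing import Dict, Optional, Tuple

COMMAND_PREFIX = "/fabric"

# Illustrative pattern names mapped to trigger keywords.
# Assumption: keyword matching stands in for the LLM's routing decision.
PATTERN_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "summarize": ("summarize", "summary", "tl;dr"),
    "improve_writing": ("improve", "rewrite", "polish"),
    "clean_text": ("clean", "tidy"),
}


def parse_command(comment_body: str) -> Optional[str]:
    """Return the instruction after the prefix, or None if not a command."""
    text = comment_body.strip()
    if not text.startswith(COMMAND_PREFIX):
        return None
    rest = text[len(COMMAND_PREFIX):]
    # Reject look-alikes such as "/fabricate".
    if rest and not rest[0].isspace():
        return None
    return rest.strip()


def select_pattern(instruction: str) -> Optional[str]:
    """Pick the first pattern whose keywords appear in the instruction."""
    lowered = instruction.lower()
    for pattern, keywords in PATTERN_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return pattern
    return None


if __name__ == "__main__":
    comment = "/fabric summarize this issue"
    instruction = parse_command(comment)
    if instruction is not None:
        print("instruction:", instruction)
        print("pattern:", select_pattern(instruction))
```

Ignoring comments that do not start with the prefix matters in practice: it keeps ordinary discussion from triggering the agent and consuming API calls.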

As a GitHub Action, it can be dropped into any repository’s workflow with minimal setup. There’s no need to stand up separate infrastructure — it runs in the context of your CI. This lowers the barrier to adding AI automation to projects. You can trigger it on pull requests, pushes, comments, schedule, etc., making it very flexible.

Recognizing that not everyone will use the same AI provider, the action supports OpenAI’s API, Anthropic’s API, or OpenRouter as backends.

Floki — Framework for LLM-Powered Autonomous Agents

Floki is an open-source framework by Roberto Rodriguez (aka Cyb3rWard0g) for building and experimenting with LLM-based autonomous agents at scale. Unlike the other tools discussed, Floki is not a point-solution for a specific task — it’s a general framework to create and orchestrate multi-agent systems, with an emphasis on robust workflows and inter-agent communication. Floki is implemented in Python and heavily utilizes Dapr (Distributed Application Runtime) to handle the hard parts of distributed systems engineering. By building on Dapr’s sidecar architecture, Floki provides a unified programming model for agents that supports both sequential, deterministic logic and event-driven, asynchronous interactions.

It uses Dapr’s Virtual Actor model to treat each agent as an independent, stateful unit that processes messages one at a time — simplifying concurrency and scalability issues in multi-agent setups. In essence, Floki gives researchers and developers a sandbox to deploy multiple AI agents that can talk to each other and work together on complex tasks, all backed by a reliable microservices infrastructure.
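The "one message at a time" guarantee of the actor model can be illustrated without Dapr. The sketch below uses only Python's `asyncio` and is not Floki's or Dapr's API; `AgentActor` and `demo` are hypothetical names. Each actor owns a mailbox, and only the actor's own loop touches its state, so concurrent senders never race on it.

```python
import asyncio
from typing import List

STOP = "STOP"  # sentinel message that ends the actor loop


class AgentActor:
    """Toy actor: a private mailbox plus state only its own loop modifies."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.handled: List[str] = []  # actor-private state

    async def send(self, message: str) -> None:
        """Enqueue a message; callers never touch actor state directly."""
        await self.mailbox.put(message)

    async def run(self) -> None:
        """Process messages strictly one at a time until STOP arrives."""
        while True:
            message = await self.mailbox.get()
            if message == STOP:
                break
            await asyncio.sleep(0)  # simulate work; next message must wait
            self.handled.append(message)


async def demo() -> List[str]:
    actor = AgentActor("triage")
    worker = asyncio.create_task(actor.run())
    # Several concurrent senders, a single consumer.
    await asyncio.gather(*(actor.send(f"alert-{i}") for i in range(3)))
    await actor.send(STOP)
    await worker
    return actor.handled


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

A production actor runtime adds what this sketch leaves out, such as persistence of actor state, placement across machines, and recovery after failures.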

Floki is a foundation to build custom agent-driven applications, including many potential cybersecurity scenarios:

- Automated Threat Hunting & Analysis: One could design a system of agents where each has a specialty — e.g., one agent monitors logs for anomalies, another agent (LLM) investigates an anomaly (pulls in external data or correlates with threat intel), and a third agent recommends or executes a containment action. Floki’s pub/sub messaging (provided by Dapr) would allow the log-monitor agent to publish an event that the analysis agent subscribes to. This kind of complex workflow, requiring reliable communication and state (to remember context of an investigation), is where Floki shines.

- Multi-Agent Red Team Simulations: Floki could be used to coordinate a team of offensive AI agents in a controlled environment. For example, one agent could scan a target, a second agent analyzes the scan for vulnerabilities, a third agent attempts exploitation, and a fourth documents the findings — all agents sharing information via the message bus. Using Floki, these agents can run concurrently yet safely without stepping on each other’s toes, thanks to the virtual actor model.

- Security Operations Automation: Envision an autonomous SOC where multiple AI agents handle tier-1 triage, threat intelligence correlation, and incident response. Floki provides the scaffolding: agents with different roles (monitoring, analysis, decision, remediation) can maintain state (via Dapr’s state API) and call services (using Dapr’s service invocation) in a fault-tolerant way. For instance, an agent could call an external API for virus scanning a file, or retrieve historical data from a database — Dapr abstracts these as invokable services. Floki ensures each agent’s actions (like a remediation step to block an IP) can be part of a larger, reliable workflow with error handling and retries, something a simple script-based agent might lack.

- General Research on Autonomous Agents: Beyond direct security use, Floki is a playground for any scenario involving multiple AI agents. This includes non-security tasks which can indirectly benefit security (such as coordinating AI for software QA or for analyzing large datasets). Its design emphasis on scalability means it’s suited for modeling real-world agent systems that run in cloud or edge environments, which is often a requirement for enterprise security solutions.
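The threat-hunting chain from the first scenario (a log monitor publishes an anomaly, an analysis agent subscribes, a containment agent acts) can be sketched with a tiny in-memory message bus. This is a stand-in for illustration only: it does not use Floki or Dapr pub/sub, and the `EventBus` class, topic names, log format, and failure threshold are all assumptions.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, str]
Handler = Callable[[Event], None]

FAILURE_THRESHOLD = 5  # assumed cut-off for treating an IP as suspicious


class EventBus:
    """Minimal in-memory topic bus; a stand-in for a real message broker."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        for handler in list(self._subscribers[topic]):
            handler(event)


def run_pipeline(log_lines: List[str]) -> List[str]:
    """Wire three toy agents together and return the containment actions."""
    bus = EventBus()
    actions: List[str] = []

    def analyze(event: Event) -> None:
        # Analysis agent: a fixed rule here; an LLM could make this call.
        if int(event["failures"]) >= FAILURE_THRESHOLD:
            bus.publish("containment", {"ip": event["ip"], "action": "block"})

    def contain(event: Event) -> None:
        # Containment agent: records the action instead of touching a firewall.
        actions.append(f"{event['action']} {event['ip']}")

    bus.subscribe("anomaly", analyze)
    bus.subscribe("containment", contain)

    # Log-monitor agent: count failed logins per source IP, then publish.
    failures: DefaultDict[str, int] = defaultdict(int)
    for line in log_lines:
        if "FAILED LOGIN" in line:
            failures[line.rsplit(" ", 1)[-1]] += 1
    for ip, count in failures.items():
        bus.publish("anomaly", {"ip": ip, "failures": str(count)})
    return actions


if __name__ == "__main__":
    logs = ["FAILED LOGIN from 203.0.113.7"] * 5 + ["OK LOGIN from 198.51.100.2"]
    print(run_pipeline(logs))
```

Because agents only share topics, any one of them can be swapped out (for example, replacing the fixed rule with an LLM call) without changing the others. Delivery guarantees, retries, and durable state are the parts a runtime like Dapr would supply.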

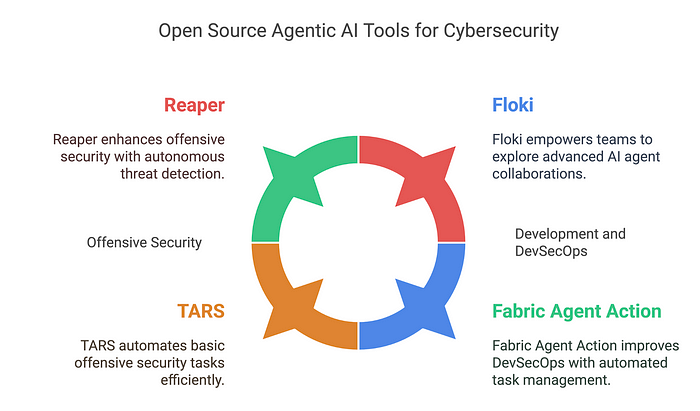

AI-agent driven cybersecurity tools are rapidly transitioning from concept to reality. Reaper and TARS show how offensive security can be enhanced by AI — from smart fuzzing of web apps to automated coordination of pentest tools — reducing manual drudgery and uncovering vulnerabilities with machine efficiency. Fabric Agent Action highlights that AI agents also have a place in our development and DevSecOps workflows, automating tasks and responses in code management platforms in a way that can improve security hygiene and developer productivity. At a more fundamental level, frameworks like Floki are empowering the community to explore what a team of AI agents can do together, laying the groundwork for autonomous threat hunting and defense systems that can operate at speed and scale beyond human capacity.